ProxySQL is a powerful tool that relays traffic to multiple MySQL backends. Its configuration can be set in proxysql.cnf, which is loaded when the daemon starts. This works well with Docker: you change the file and redeploy with the new settings. Now I need a ProxySQL Helm chart to deploy on my Kubernetes cluster.

Official ProxySQL Helm Chart

I tried to use the ProxySQL team's Helm chart but quickly ran into some issues.

The Docker Hub image is a bit outdated, and the Helm chart does not seem to be maintained anymore, even though the team did a great job on it.

The configuration lives in proxysql.cnf. It would be nice if the file content were loaded from Values.yaml, making it dynamic for each deployment.

My setup relies mainly on mysql_query_rules_fast_routing, but here's the problem: it cannot be configured through proxysql.cnf, and the ProxySQL developers have made it clear they will not change this.

Docker Image with MySQL Client

The ProxySQL Docker image ships without a MySQL client, probably to keep the image small. You need one, though, to inject SQL queries locally when the pod starts up. The Dockerfile is simple, but it forces you to maintain your own image.

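FROM proxysql/proxysql:2.2.0

# Add the MySQL client so SQL can be injected through the admin interface
RUN apt-get update && apt-get install -y mysql-client && apt-get clean && rm -rf /var/lib/apt/lists/*

# Run ProxySQL in the foreground with its data directory
ENTRYPOINT ["proxysql", "-f", "-D", "/var/lib/proxysql"]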

I made an image directly available on Docker Hub. It is referenced in the Helm chart, but you can build your own if you want to host it in a private registry.

mysql_query_rules_fast_routing Rules

I changed the Helm template so that it reads SQL rules from the values file and loads them into ProxySQL dynamically once the pod is up and running.

sqlqueries: |
  --
  SET mysql-have_ssl='true';
  LOAD MYSQL VARIABLES TO RUNTIME;
  SET admin-web_enabled='true';
  LOAD ADMIN VARIABLES TO RUNTIME;
  --
  INSERT INTO mysql_servers (hostgroup_id,hostname) VALUES (0,'db1.domain.lan');
  INSERT INTO mysql_servers (hostgroup_id,hostname) VALUES (1,'db2.domain.lan');
  --
  INSERT INTO mysql_users (username,password,use_ssl,default_hostgroup) VALUES ('proxy','*9EF51D21B4A3E7BC7A58925308F229CF4AEEC9E1',1,0);
  --
  INSERT INTO mysql_query_rules_fast_routing (username,schemaname,destination_hostgroup,comment) VALUES ('proxy','schema1',0,'');
  INSERT INTO mysql_query_rules_fast_routing (username,schemaname,destination_hostgroup,comment) VALUES ('proxy','schema2',1,'');
  --
  LOAD MYSQL USERS TO RUNTIME;
  LOAD MYSQL SERVERS TO RUNTIME;
  LOAD MYSQL QUERY RULES TO RUNTIME;
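The password column in the mysql_users row above holds a hash rather than clear text. Assuming it follows the mysql_native_password format (an asterisk followed by the uppercase hex of SHA1(SHA1(password))), a value for your own user could be produced with a small shell sketch like this one. The function names are illustrative and not part of the chart, and the sketch assumes sha1sum is installed.

```shell
#!/bin/sh
# Sketch: build a mysql_native_password-style hash,
# i.e. "*" followed by UPPER(HEX(SHA1(SHA1(password)))).
# hex_to_bin and native_password_hash are illustrative names.

# Turn a hex string (even number of digits) into raw bytes with POSIX printf.
hex_to_bin() {
  hex=$1
  while [ -n "$hex" ]; do
    rest=${hex#??}          # everything after the first two digits
    byte=${hex%"$rest"}     # the first two digits
    hex=$rest
    # Convert the hex pair to a 3-digit octal escape and emit that byte
    printf "\\$(printf '%03o' "0x$byte")"
  done
}

native_password_hash() {
  # First SHA1 pass over the clear-text password
  first=$(printf '%s' "$1" | sha1sum | cut -d' ' -f1)
  # Second SHA1 pass over the raw bytes of the first digest
  second=$(hex_to_bin "$first" | sha1sum | cut -d' ' -f1)
  printf '*%s\n' "$(printf '%s' "$second" | tr 'a-f' 'A-F')"
}
```

Calling native_password_hash 'password' prints *2470C0C06DEE42FD1618BB99005ADCA2EC9D1E19, which can then be pasted into the INSERT statement.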

SQL queries are added to the Values.yaml file, loaded into the ConfigMap and mounted as a file at /docker-entrypoint-initdb.d/sqlqueries. They are then injected once the ProxySQL daemon is up and running, using a postStart hook. Kubernetes fires this hook right after the container is created, with no guarantee that the entrypoint has finished starting, hence the short sleep before the client connects.

lifecycle:
  postStart:
    exec:
      command: ["/bin/sh", "-c", "sleep 1 && mysql --show-warnings -uadmin -padmin -h127.0.0.1 -P{{ .Values.pod.adminPort }} < /docker-entrypoint-initdb.d/sqlqueries"]
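A fixed sleep 1 can lose the race if ProxySQL takes longer to open its admin port. One possible alternative, sketched here as an assumption rather than what the chart does, is to poll until the admin interface answers before injecting the file. The retry helper, the RETRIES and DELAY variables and port 6032 (ProxySQL's default admin port) are illustrative.

```shell
#!/bin/sh
# retry CMD [ARGS...]: run CMD until it succeeds, up to RETRIES attempts,
# waiting DELAY seconds between attempts. Returns 1 if every attempt fails.
retry() {
  attempts=${RETRIES:-30}
  delay=${DELAY:-1}
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    n=$((n + 1))
    # Only wait when another attempt is still to come
    [ "$n" -le "$attempts" ] && sleep "$delay"
  done
  return 1
}

# Possible use in the postStart command (hypothetical, adjust credentials and port):
#   retry mysql -uadmin -padmin -h127.0.0.1 -P6032 -e 'SELECT 1' >/dev/null \
#     && mysql --show-warnings -uadmin -padmin -h127.0.0.1 -P6032 \
#          < /docker-entrypoint-initdb.d/sqlqueries
```

Because the hook runs a single sh -c string, the function would have to be inlined in that string or shipped in the image as a small script.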

Using the Helm Chart

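The Helm chart is available on GitHub. Clone the repo, create your own values file based on example.yaml and deploy the chart. The command below uses Helm 2 syntax, where -n sets the release name; with Helm 3, -n selects a namespace and the release name is passed as the first argument (helm install my-release -f myconf.yaml ./proxysql).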

helm install -n my-release -f myconf.yaml ./proxysql

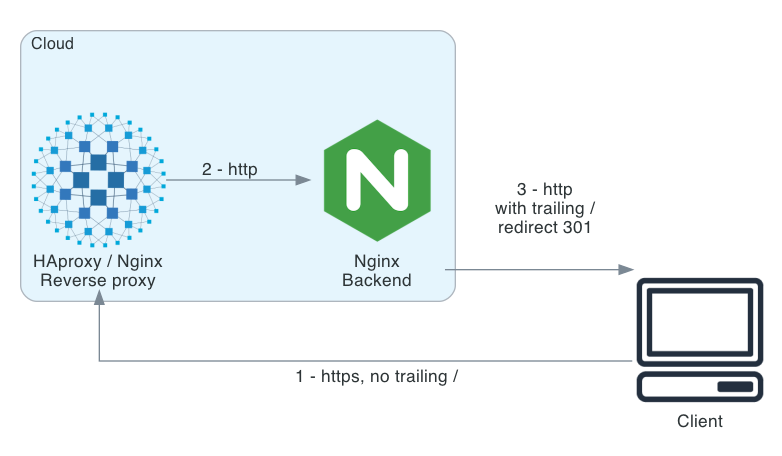

Read this post if you want to enable and reach the stats server through Kubernetes ingress rules.

Bring Your Own Self-Signed Cert

ProxySQL 2.3.0 can now reload the SSL/TLS certificate on the fly.

Before that, you had to build your own ProxySQL container to embed the CA and MySQL certificate, which made them static.

With this new feature, you only have to create a Kubernetes TLS secret containing ca.crt, tls.cert and key.crt, which the ProxySQL Helm chart mounts.

Then uncomment the matching set of commands, which use the MySQL client's SYSTEM instruction to replace ProxySQL's auto-generated certs.
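Creating the secret and triggering the reload could look like the sketch below. It is only an illustration: the secret name proxysql-tls, the local file paths, the admin credentials and port 6032 are assumptions, the key names are taken from the text above and should be checked against what the chart actually mounts, and the KUBECTL and MYSQL variables exist solely so the commands can be dry-run with echo. PROXYSQL RELOAD TLS is the admin command ProxySQL 2.3.0 provides for picking up new certificate files.

```shell
#!/bin/sh
# create_tls_secret NAME CA CERT KEY: store the three files in one secret.
# Key names (ca.crt, tls.cert, key.crt) follow the article; verify them
# against the chart before relying on this.
create_tls_secret() {
  ${KUBECTL:-kubectl} create secret generic "$1" \
    --from-file=ca.crt="$2" \
    --from-file=tls.cert="$3" \
    --from-file=key.crt="$4"
}

# reload_tls: ask a running ProxySQL to re-read its certificate files.
# Meant to be run where the admin interface is reachable, e.g. inside the pod.
reload_tls() {
  ${MYSQL:-mysql} -uadmin -padmin -h127.0.0.1 -P"${ADMIN_PORT:-6032}" \
    -e 'PROXYSQL RELOAD TLS'
}

# Example (hypothetical names and paths):
#   create_tls_secret proxysql-tls ./ca.pem ./server-cert.pem ./server-key.pem
```

Setting KUBECTL=echo or MYSQL=echo prints the command that would be run instead of executing it, which is handy for checking the arguments first.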
